[TOC]

An excellent tutorial: https://huggingface.co/docs/transformers/tasks/language_modeling

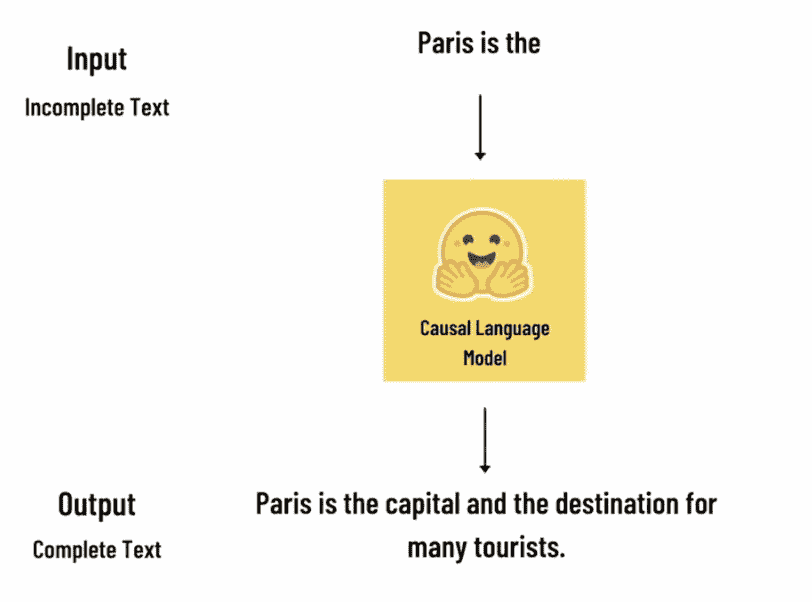

Causal Language Modeling

Causal language modeling predicts the next token in a sequence of tokens, and the model can only attend to tokens on the left. This means the model cannot see future tokens. GPT-2 is an example of a causal language model.
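The "left only" constraint is usually enforced with an attention mask. The following is a minimal sketch in plain Python, not taken from the tutorial; the helper name `causal_mask` is hypothetical, and it assumes each position may attend to itself as well as to earlier positions.

```python
def causal_mask(seq_len):
    """Build a seq_len x seq_len mask.

    Entry [i][j] is True when position i may attend to position j,
    i.e. when j is at or to the left of i (assumption: self-attention
    to the current position is allowed).
    """
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]


if __name__ == "__main__":
    # Print the mask: "x" = visible, "." = hidden (a future token).
    for row in causal_mask(4):
        print("".join("x" if allowed else "." for allowed in row))
```

Each row shows what one position can see, so the visible region forms a lower triangle: the first token sees only itself, the last token sees the whole sequence.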

Metrics

- Perplexity (taking its logarithm gives the cross entropy)

- Cross Entropy
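The relationship between the two metrics can be checked numerically. This is a small sketch under stated assumptions: cross entropy is the average negative natural-log probability that the model assigned to each correct token, and the function names and the probability values are made up for illustration.

```python
import math


def cross_entropy(token_probs):
    """Average negative log-likelihood (in nats) of the correct tokens.

    token_probs: probability the model assigned to each correct token.
    """
    return -sum(math.log(p) for p in token_probs) / len(token_probs)


def perplexity(token_probs):
    """Perplexity is the exponential of the cross entropy."""
    return math.exp(cross_entropy(token_probs))


if __name__ == "__main__":
    probs = [0.5, 0.1, 0.2]  # hypothetical per-token probabilities
    ce = cross_entropy(probs)
    ppl = perplexity(probs)
    # log(perplexity) recovers the cross entropy
    print(ce, ppl, math.log(ppl))
```

A model that assigns probability 0.25 to every correct token has a perplexity of about 4, which can be read as "as uncertain as a uniform choice among four tokens".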

Dataset

Any plain-text corpus can be used.

Usage

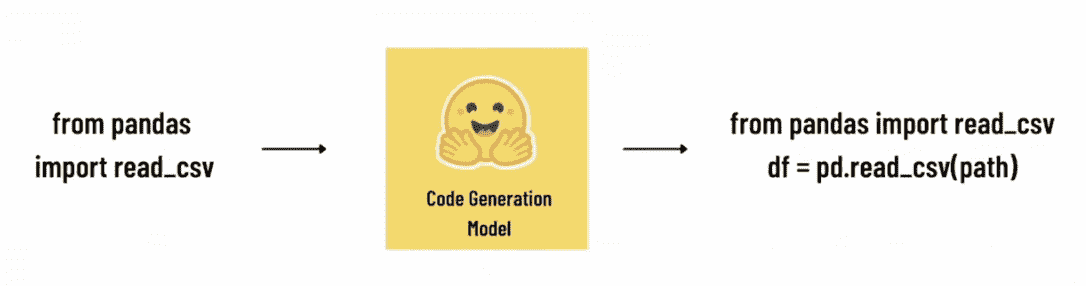

Can be used for code generation.

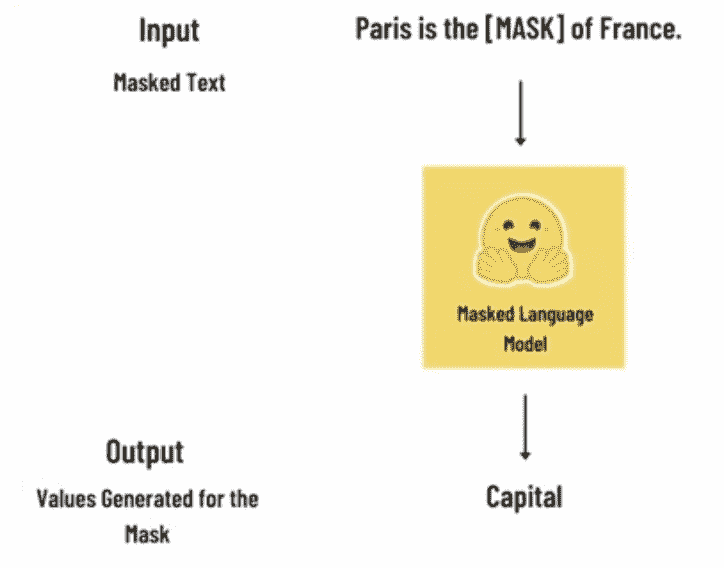

Masked Language Modeling

Masked language modeling predicts a masked token in a sequence, and the model can attend to tokens bidirectionally. This means the model has full access to the tokens on the left and right. Masked language modeling is great for tasks that require a good contextual understanding of an entire sequence. BERT is an example of a masked language model.
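To make the training setup concrete, here is a simplified sketch of how masked inputs and labels might be built. It is an illustration, not the tutorial's data collator: the helper `mask_tokens`, the `[MASK]` string, and the 15% default rate are assumptions, and BERT's additional trick of sometimes substituting a random token or leaving the token unchanged is omitted.

```python
import random


def mask_tokens(tokens, mask_prob=0.15, mask_token="[MASK]", seed=0):
    """Randomly hide tokens and record what the model must predict.

    Returns (masked, labels): labels holds the original token at masked
    positions and None elsewhere, so the loss would only be computed on
    the hidden tokens.
    """
    rng = random.Random(seed)  # seeded for reproducibility
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(mask_token)
            labels.append(tok)
        else:
            masked.append(tok)
            labels.append(None)
    return masked, labels


if __name__ == "__main__":
    sentence = "the cat sat on the mat".split()
    masked, labels = mask_tokens(sentence, mask_prob=0.3)
    print(masked)
    print(labels)
```

Because the unmasked tokens on both sides of a `[MASK]` stay visible, the model can use left and right context to fill in the blank, which is the bidirectional behavior described above.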

Metrics

- Perplexity (taking its logarithm gives the cross entropy)

- Cross Entropy

Dataset

Any plain-text corpus can be used.